WELCOME TO

BLOODSTONE SOLUTIONS INC.

Advising companies, organizations and governments on the digital infrastructure (DI) they need to supercharge research, development and operational improvement, and to enable innovation, competitive advantage and global excellence.

MARK DIETRICH

Bloodstone Solutions Inc. was founded by Mark Dietrich, a leader in creating globally competitive DI and advising the companies, innovators and vendors that rely on DI capabilities.

INSIGHTS

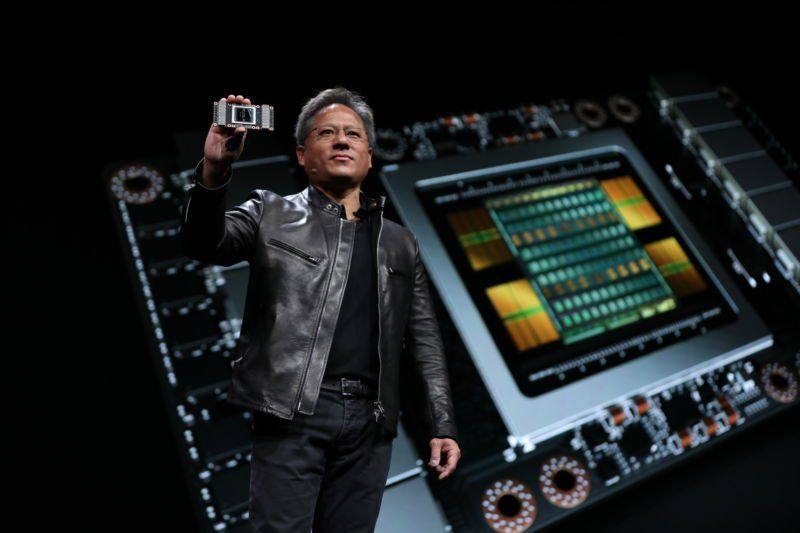

Oh, sorry, maybe you hadn’t gotten the memo, but yes, we seem to have come to the end of Moore’s Law. This became very clear throughout this past week’s Supercomputing 2018 conference in Dallas, TX (the 30th of these annual conferences). Maybe this hasn’t reached your newsfeed because it’s not really news – MIT Technology Review discussed some of the implications back in 2016 (foreshadowing several current initiatives, including architectural innovation). Maybe it’s not in your newsfeed because the evidence is still mixed (e.g. this article in the November 2018 issue of IEEE Spectrum), but you really need to think through the implications for your business.

Back at home, the laptops and desktops we buy today aren’t really that much more powerful or that much cheaper than last year’s. (I have to buy one soon, and my wallet is feeling very disappointed!)

At the top end, the very latest, most powerful supercomputers are, well, very expensive and very big, harnessing very large numbers of processors. Today’s #1 in the Top500 is Summit, at Oak Ridge National Laboratory (ORNL) in Oak Ridge, TN. It has over 27,000 NVIDIA Volta GPUs and 9,000 IBM Power9 CPUs (image above) for total computational horsepower of over 200 petaflops -- that’s 200,000,000,000,000,000 double precision floating point calculations per second! Summit takes up 5,600 square feet of data centre space, weighs 340 tons, and consumes 13 megawatts of power.

You probably didn’t notice the end of Moore’s Law, because improvements in the architecture of your laptops (memory organization, graphics cards, faster communication, etc.), and in the software that runs on them, have enabled apparent increases in performance and functionality. In the same way, purchasers of the fastest supercomputers have experimented with the same kinds of architectural innovation, albeit on a much larger scale – enabling some absolutely stunning scientific achievements.

SC18, supercomputing’s largest annual conference and exhibition, starts this weekend in Dallas. Many sessions will address important challenges for Digital Infrastructure (DI) providers around the world. For example:

- Demonstrating the impact of DI investments and DI-enabled research
- Showcasing how DI is essential not only to academic research, but to significant industrial sectors around the world
- Best practices for sustaining and maintaining critical research software after its initial creation and release
- Integrating data services into the complete DI ecosystem
- Effectively managing scientific workflows (note: this is just one of several interesting sessions on this topic!)

Historically, SCXX conferences have focussed on hardware – from design to performance improvement – and there will be plenty of nuts and bolts presentations, topped off by the announcement of the November 2018 edition of the Top500 list. After that’s announced, I’ll be updating my national compilation of HPC capabilities in academia and research (stay tuned!).

Of course, my work with Compute Canada since 2014, and then benchmarking DI ecosystems around the world over the last year, underscores how “petaflops” aren’t the only important measure of the strength of your Digital Infrastructure ecosystem. For example, what about creating a “Data500” – a rigorous census of persistent research data storage capabilities around the world? Both the number of data sets and their sizes continue to grow exponentially – and storage costs are becoming significant components of DI budgets. The real value of supercomputing lies not in the hardware, but in the data that is produced by that hardware, so we need to start measuring data capabilities as well as compute capabilities.

As another example, specialized compute architectures are increasingly being used to solve important DI problems -- like bioinformatics and molecular dynamics -- more quickly than the general-purpose systems that the Top500 measures. The HPCG benchmark incorporates a number of the calculations the community needs – and has highlighted how poorly many general-purpose systems actually perform on real-world scientific workloads – and how widely this performance varies across existing architectures. HPCG also assumes the system being tested can operate as a general-purpose computer – so more specific measures would be needed to assess specialized architectures. Many sessions touch on important aspects of this challenge -- here are just a few:

- Reconfigurable systems and benchmarking reconfigurable systems
- Programming for diverse architectures
- Optimizing memory architectures

These activities will enable new solutions to very important scientific challenges, solutions that could significantly change the DI landscape -- and the productivity of research & development. Stay tuned for updates on this groundbreaking work!

Oak Ridge National Laboratory (ORNL) is the new home of the world’s fastest computer, Summit – arguably the first “almost exascale” computer, clocking in at over 200 petaflops of calculation horsepower (able to perform 200 quadrillion floating point operations per second). But ORNL’s head of Advanced Computing, Jeff Nichols, said over the summer that “in a few years we won’t be talking about exascale any more.” With governments around the world just now pouring billions into exascale computing programs, what could Nichols be talking about?

One explanation comes from looking at how specialized hardware architectures, like GPUs, FPGAs and ASICs, are achieving amazing performance improvements for specific types of calculation, such as the Fast Fourier Transform (FFT), a mathematical operation on time series data that is fundamental to many analysis and simulation techniques. Massive speedup of FFT and similar operations could enable game-changing performance improvements for key analysis and modelling applications – shortening times to solution for large categories of DI users, and reducing the demand for general-purpose exascale systems like Summit. This is not science fiction – it’s been happening for years. Here are some examples: