Oh, sorry, maybe you hadn’t gotten the memo, but yes, we seem to have come to the end of Moore’s Law. This became very clear throughout this past week’s Supercomputing 2018 conference in Dallas, TX (the 30th of these annual conferences). Maybe this hasn’t reached your newsfeed because it’s not really news – MIT Technology Review discussed some of the implications of this back in 2016 (foreshadowing several current initiatives including architectural innovation). Maybe it’s not in your newsfeed because the evidence is still mixed (e.g. this article in the November 2018 issue of IEEE Spectrum ), but you really need to think through the implications of this for your business.

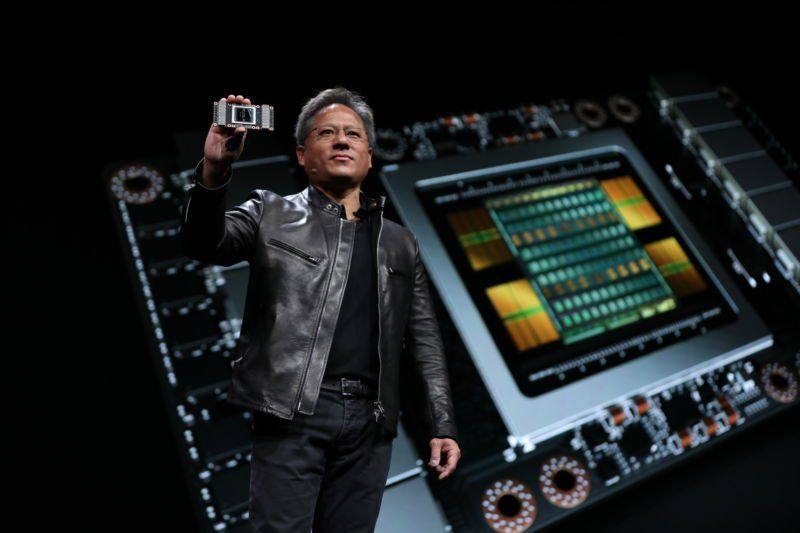

Back at home, the laptops and desktops we buy today aren’t really that much more powerful or that much cheaper compared to last year. (I have to buy one soon, and my wallet is feeling very disappointed!) At the top end, the very latest, most powerful supercomputers are, well, very expensive and very big, harnessing very large numbers of processors. Today’s #1 in the Top500 is Summit , at the Oakridge National Laboratory (ORNL) in Oak Ridge TN. It has over 27,000 NVidia Volta GPUs and 9,000 IBM Power9 CPUs (image above) for total computational horsepower of over 200 Petaflops/s -- that’s 200,000,000,000,000,000 double precision floating point calculations per second! Summit takes up 5,600 square feet of data centre space, weighs 340 tons, and consumes 13 megawatts of power.

You probably didn’t notice the end of Moore’s Law, because improvements in the architecture of your laptops (memory organization, graphics cards, faster communication, etc.), and the software that runs on them, have enabled apparent increases in performance and functionality. In the same way, purchasers of the fastest supercomputers have experimented with the same kinds of architectural innovation, albeit on a much larger scale – enabling some absolutely stunning scientific achievements .

SC18, supercomputing’s largest annual conference and exhibition, starts this weekend in Dallas. Many sessions will address important challenges for Digital Infrastructure (DI) providers around the world. For example:

- Demonstrating the impact of DI investments and DI-enabled

research

- Showcasing how DI is essential not only to academic

research, but to significant industrial sectors around the world

- Best practices for sustaining and maintaining critical research

software after its initial creation and release

- Integrating data services into the complete DI ecosystem

- Effectively managing scientific workflows

(note: this is just one of several interesting sessions on this topic!)

Historically SCXX conferences have focussed on hardware – from design to performance improvement – and there will be plenty of nuts and bolts presentations, topped off by the announcement of the November 2018 edition of the Top 500 list. After that’s announced, I’ll be updating my national compilation of HPC capabilities in academia and research (stay tuned!).

Of course my work with Compute Canada since 2014, and then benchmarking DI ecosystems around the world over the last year, underscores how “petaflops” aren’t the only important measure of the strength of your Digital Infrastructure ecosystem.

For example, what about creating a “Data500” – a rigorous census of persistent research data storage capabilities around the world? Data sets and dataset sizes both continue to grow exponentially – and storage costs are becoming significant components of DI budgets. The real value of supercomputing lies not in the hardware, but in the data that is produced by that hardware, so we need to start measuring data capabilities as well as compute capabilities.

As another example, specialized compute architectures are increasingly being used to solve important DI problems -- like bioinformatics and molecular dynamics -- more quickly than the general-purpose systems that the Top500 measures. The HPCG benchmark incorporates a number of the calculations the community needs – and has highlighted how poorly many general purpose systems actually perform on real-world scientific workloads – and how widely this performance varies across existing architectures. HPCG also assumes the system being tested can operate as a general-purpose computer – so more specific measures would be needed to assess specialized architectures.

Many sessions touch on important aspects of this challenge -- here are just a few:

- Reconfigurable systems

and

benchmarking reconfigurable systems

- Programming for diverse architectures

- Optimizing memory architectures

These activities will enable new solutions to very important scientific challenges, solutions that could significantly change the DI landscape -- and the productivity of research & development. Stay tuned for updates on this groundbreaking work!

Oak Ridge National Laboratory (ORNL) is the new home of the world’s fastest computer, Summit – arguably the first “almost exascale” computer clocking in at over 200 petaflops of calculation horsepower (able to perform 200 quadrillion floating point operations per second). But ORNL’s head of Advanced Computing, Jeff Nichols, said over the summer that “in a few years we won’t be talking about exascale any more.” With governments around the world just now pouring billions into exascale computing programs, what could Nichols be talking about?

One explanation comes from looking at how specialized hardware architectures, like GPUs, FPGAs and ASICs, are achieving amazing performance improvements for specific types of calculation, such as Fast Fourier Transforms, a mathematical operation on time series data that is fundamental to many analysis and simulation techniques. Massive speedup of FFT and similar operations could enable game-changing performance improvements for key analysis and modelling applications – shorten times to solution for large categories of DI users, and reduce the demand for general purpose exascale systems like Summit.

This is not science fiction – it’s been happening for years. Here are some examples:

Major DI providers in both the US and Canada have quantified how research that uses DI techniques and tools has a significantly greater impact than research that does not use these techniques. DI can on average enable increased research impact, as measured by FWCI, by a factor of almost 2, as reported by Compute Canada

, or of over 4, as reported by XSEDE

. Both analyses were each based on over 15,000 articles in science journals, strongly demonstrating the importance of DI in today’s R&D.

In Canada, where Compute Canada is the primary provider of DI, Compute Canada conducted a bibliometric analysis of research outputs self-reported by over 2,300 faculty users of the facility. In total they reported 35,666 research contributions that were enabled by Compute Canada between 2011 and 2016, which is 47% of the total reported by those users. 25,759 of the enabled contributions were articles in science journals, and, of those, the impact of 15,874 could be quantified using bibliometric tools provided by SciVal .

This analysis showed that using Digital Infrastructure almost DOUBLED the impact of the resulting research. The actual bibliometric statistic is called the field-weighted citation index (FWCI) (see more about that here ) – and an FWCI of 1.93 means that those articles were cited on average almost twice as often as papers in the same field (worldwide). In some fields using DI techniques was associated with FWCIs of roughly 5. The chart below illustrates field-by-field results.

In the Budget 2018, announced last February, the Canadian government made a bold promise to invest $572.5 million in digital infrastructure that would support and grow the vibrant research and innovation sector across Canada. The Government also promised to work with stakeholders to develop a “Digital Research Infrastructure Strategy” that would explain how to use this money to “deliver open and equitable access” to researchers across the country.

As CEO of Compute Canada through late 2017, I was heavily involved in advising the government in advance of the Budget. Obviously I can’t share specifics, but the advice was very detailed and reflected significant and broad stakeholder input. However it is clear that Canada’s DI community couldn’t come up with an organizational design that would satisfy everyone. This is why the Budget talks about how “the strategy [would] include how to incorporate the roles currently played by the Canada Foundation for Innovation , Compute Canada and CANARIE .”

Defining the services that go into Digital Infrastructure, and the organizations needed to deliver them, is a difficult topic around the world. Bloodstone just completed a study (future posts will share more details of our results) of exactly this question across eight countries (plus one region of countries), and there were almost as many organizational patterns as there were countries in that study. Of course this study wasn’t exhaustive -- two dozen countries have made large enough investments in DI (focusing on academia and research) to appear in the Top500 list – but it was broad enough to offer some useful lessons. Here are a few of the top ones:

-

Focus on, and collaborate with, the best researchers. Of course, this is a platitude, and every DI organization will “say” this is their priority. However, it is also very easy for DI service providers to fall into the “shared services” IT trap, prioritizing efficiency over effectiveness, and prioritizing the 80% over the 20%. This approach will alienate the most demanding researchers, who will then go their own way, while the rest of the community feels held back, rather than enabled, by their DI resources. Instead, DI providers need to collaborate with those most demanding researchers to ensure that the DI ecosystem stays at the leading edge and allows the best researchers to amplify their reach and achievement. (By the way, the leading edge does NOT necessarily mean having the biggest systems!)

-

Tame the leading edge so that the 80% can use it too. Leading edge technology is diverging instead of converging, the “middleware” continues to evolve, and – most importantly -- the problems your researchers are trying to solve are, by definition, “novel”. Bottom line, the landscape keeps changing and HAS to keep changing, so DI providers have to build and maintain intermediate solutions and interfaces so that the less demanding 80% can be effective and productive without having to keep up with all this change. Many important components in the DI ecosystem, such as scientific gateways and platforms, research asset management capabilities and research software devops, are critical to enabling researcher productivity in the face of this rapid change. These activities must be sustainably resourced if most researchers are going to experience the benefit of other DI investments (e.g. in compute or storage).

-

Invest in ALL the components of the DI ecosystem in a balanced, sustained way. Sustainability is more than predictable capital infusions. It also means making sure the power bill gets paid. It means creating stable career paths for research software experts and then making sure they are working to maintain and improve both the analysis codes and the middleware and gateways. It means investing in cybersecurity training and procedures. It means rewarding documentation and testing – from metadata annotation and code documentation, to reproducibility initiatives. It means creating standards that enable interoperability.

Of course, you will say that even these few lessons conflict with one another – for example, if we’re serving our most demanding researchers, they won’t want a penny spent on interoperability! (I didn’t say it was easy!)

I look forward to the government’s promised DI strategy – and I hope it will reflect some of these lessons!